I think I have to debayer my data, but I'm not sure if I'm doing this correctly. The sensor reports these controls:

- `sensor_modes (int) : min=0 max=30 step=1 default=30 value=3 flags=read-only`
- `sensor_dv_timings (u32) : min=0 max=0 step=0 default=0 flags=read-only, has-payload`
- `sensor_control_properties (u32) : min=0 max=0 step=0 default=0 flags=read-only, has-payload`
- `sensor_image_properties (u32) : min=0 max=0 step=0 default=0 flags=read-only, has-payload`
- `sensor_signal_properties (u32) : min=0 max=0 step=0 default=0 flags=read-only, has-payload`
- `write_isp_format (int) : min=1 max=1 step=1 default=1 value=1`
- `size_align (intmenu): min=0 max=2 default=0 value=0`
- `height_align (int) : min=1 max=16 step=1 default=1 value=1`
- `override_enable (intmenu): min=0 max=1 default=0 value=0`
- `gain (int) : min=256 max=90624 step=1 default=256 value=256 flags=slider`
- `bypass_mode (intmenu): min=0 max=1 default=0 value=0`
- `hdr_enable (intmenu): min=0 max=1 default=0 value=0`
- `group_hold (intmenu): min=0 max=1 default=0 value=0`
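If the sensor delivers raw Bayer data, then yes, something has to demosaic it before it looks like a normal colour image. As a quick check that does not need OpenCV, the simplest form is a half-resolution "superpixel" demosaic, where each 2x2 block of the mosaic becomes one colour pixel. This is a minimal NumPy sketch, not what the camera stack itself does; it assumes an RGGB layout starting at the top-left pixel (suggested by the `BAYER_RG` code used in the question, but not confirmed) and a 2D array with one value per pixel. The function name `debayer_superpixel_rggb` is hypothetical.

```python
import numpy as np


def debayer_superpixel_rggb(mosaic: np.ndarray) -> np.ndarray:
    """Half-resolution demosaic of a single-channel RGGB mosaic (hypothetical helper).

    Assumes every 2x2 block is laid out as
        R G
        G B
    and returns an array of shape (height // 2, width // 2, 3) in BGR order,
    matching OpenCV's default channel order.
    """
    if mosaic.ndim != 2:
        raise ValueError("expected a single-channel (height, width) mosaic")
    h, w = mosaic.shape
    # Drop a trailing odd row/column so every pixel belongs to a full 2x2 block.
    mosaic = mosaic[: h - h % 2, : w - w % 2]
    r = mosaic[0::2, 0::2]   # top-left of each block (assumed red)
    g1 = mosaic[0::2, 1::2]  # top-right (assumed green)
    g2 = mosaic[1::2, 0::2]  # bottom-left (assumed green)
    b = mosaic[1::2, 1::2]   # bottom-right (assumed blue)
    # Average the two greens in a wider type to avoid overflow, then cast back.
    g = ((g1.astype(np.uint32) + g2) // 2).astype(mosaic.dtype)
    return np.stack([b, g, r], axis=-1)
```

For a full-resolution image, `cv2.cvtColor(mosaic, code)` with one of the `cv2.COLOR_BayerXX2BGR` codes performs the demosaicing on a single-channel 8-bit or 16-bit array. Two details are easy to trip over: `cv2.imdecode` decodes compressed image files such as JPEG or PNG and takes a read flag, not a colour-conversion code; and OpenCV names its Bayer codes after the second row of the pattern, so an RGGB sensor typically needs `cv2.COLOR_BayerBG2BGR`. If the colours look swapped, trying the other Bayer codes is a cheap way to find the right one.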

After running `v4l2-ctl -d /dev/video1 --list-formats-ext`, I see:

- `frame_length (int) : min=1551 max=45714 step=1 default=4291 value=4291 flags=slider`
- `coarse_time (int) : min=1 max=45704 step=1 default=4281 value=4281 flags=slider`
- `coarse_time_short (int) : min=1 max=45704 step=1 default=4281 value=4281 flags=slider`

With my v4l2capture code, `cv_frame` comes back all zeros. The relevant lines are:

- `lect((video,), (), ())  # Wait for the device to fill the buffer.`
- `frame = np.frombuffer(image_data, dtype=np.uint8).reshape(1920,1080,2)`
- `cv_frame = cv2.imdecode(frame, cv2.COLOR_BAYER_RG2BGR)`

The two channels of `frame` show nothing but static-looking images, but they respond in real time.
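The two noisy but live "channels" are consistent with, though not proof of, a 10-bit Bayer format stored as one 16-bit word per pixel: reading the buffer as `uint8` and reshaping to `(..., 2)` splits every sample into its low byte and high byte. Separately, NumPy shapes are `(rows, columns)`, so a 1920x1080 image has shape `(1080, 1920)`. The sketch below assumes little-endian 16-bit samples with the value in the low bits and an optional row stride; the helper names and the `bytes_per_line` parameter are hypothetical, and the real format and stride are worth confirming with `v4l2-ctl -d /dev/video1 --get-fmt-video`.

```python
import numpy as np


def unpack_raw16(buf, width: int, height: int, bytes_per_line=None) -> np.ndarray:
    """Return a (height, width) 16-bit mosaic from a raw capture buffer (hypothetical helper).

    Assumes each pixel is one little-endian 16-bit word and that each row
    occupies `bytes_per_line` bytes (default: width * 2); any padding at the
    end of a row is discarded. The result is a view into `buf`, not a copy.
    """
    stride = width * 2 if bytes_per_line is None else bytes_per_line
    if stride < width * 2 or stride % 2:
        raise ValueError("bytes_per_line must be even and at least width * 2")
    needed = stride * height
    if len(buf) < needed:
        raise ValueError(f"buffer has {len(buf)} bytes, need at least {needed}")
    # '<u2' = little-endian unsigned 16-bit; read exactly `height` rows of `stride` bytes.
    words = np.frombuffer(buf, dtype="<u2", count=needed // 2)
    # NumPy shapes are (rows, columns), i.e. (height, width), not (width, height).
    return words.reshape(height, stride // 2)[:, :width]


def to_8bit(mosaic: np.ndarray, bits: int = 10) -> np.ndarray:
    """Scale a right-aligned `bits`-deep mosaic to uint8 by dropping the low bits."""
    shifted = mosaic >> (bits - 8)
    # Clip in case the unused upper bits of the 16-bit words are not zero.
    return np.minimum(shifted, 255).astype(np.uint8)
```

With the buffer from v4l2capture, `to_8bit(unpack_raw16(image_data, 1920, 1080))` would give a single-channel `uint8` mosaic of shape `(1080, 1920)`, which is the kind of input a Bayer-to-BGR conversion such as `cv2.cvtColor` expects. If the result looks too dark or clipped, the samples may be aligned differently within each 16-bit word.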

I have also tried using the v4l2capture library in Python, with code that starts with `import numpy as np` and opens the camera with `video = v4l2capture.Video_device("/dev/video1")`.

There is no lag when running:

`gst-launch-1.0 nvcamerasrc fpsRange="15.0 15.0" sensor-id=1 ! 'video/x-raw(memory:NVMM), width=(int)4056, height=(int)3040, format=(string)I420, framerate=(fraction)15/1' ! nvtee ! nvvidconv flip-method=2 ! 'video/x-raw, format=(string)I420' ! xvimagesink -e`

But when I try to run this in OpenCV:

`cap = cv2.VideoCapture("gst-launch-1.0 nvcamerasrc fpsRange='15.0 15.0' sensor-id=1 ! video/x-raw(memory:NVMM), width=(int)4056, height=(int)3040, format=(string)I420, framerate=(fraction)15/1 ! nvtee ! nvvidconv flip-method=2 ! video/x-raw, format=(string)I420 ! xvimagesink -e")`

`cap.read()` returns None. I do not want to use GStreamer to convert my video to BGR because that causes some lag in the video, but it does work:

`cv2.VideoCapture("nvcamerasrc fpsRange='1.0 60.0' sensor-id=1 ! video/x-raw(memory:NVMM), width=(int)1920, height=(int)1080, format=(string)I420, framerate=(fraction)15/1 ! nvvidconv flip-method=6 ! video/x-raw, format=(string)I420 ! videoconvert ! video/x-raw, format=(string)BGR ! appsink")`
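Two things stand out in the failing OpenCV call. `cv2.VideoCapture` expects a pipeline description, not a shell command, so the `gst-launch-1.0` prefix, the trailing `-e` and any shell quoting need to go; and OpenCV pulls frames from an `appsink`, whereas `xvimagesink` only displays them. Below is a minimal sketch that builds such a string, assuming the same `nvcamerasrc` caps as the working 1920x1080 pipeline. To reduce the cost of the BGR step it asks `nvvidconv` for `BGRx`, leaving `videoconvert` only to drop the padding byte, and it configures `appsink` to keep just the newest frame; whether this removes enough of the lag on this board is an assumption that would need measuring. The function name is hypothetical.

```python
def build_nvcamerasrc_pipeline(sensor_id: int = 1, width: int = 1920,
                               height: int = 1080, fps: int = 15,
                               flip_method: int = 6) -> str:
    """Return a GStreamer pipeline string for cv2.VideoCapture(..., cv2.CAP_GSTREAMER).

    Compared with a gst-launch-1.0 command line, the string has no program name,
    no "-e" flag and no shell quotes around the caps, and it ends in appsink so
    that OpenCV can pull frames (xvimagesink would only display them).
    """
    return (
        f"nvcamerasrc fpsRange='{fps:.1f} {fps:.1f}' sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width=(int){width}, height=(int){height}, "
        f"format=(string)I420, framerate=(fraction){fps}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        # Assumption: letting nvvidconv output BGRx leaves videoconvert with only
        # the BGRx -> BGR step, which is usually cheaper than I420 -> BGR.
        "video/x-raw, format=(string)BGRx ! "
        "videoconvert ! video/x-raw, format=(string)BGR ! "
        # Keep only the most recent frame so a slow reader does not build a backlog.
        "appsink drop=true max-buffers=1"
    )
```

Usage would be along the lines of `cap = cv2.VideoCapture(build_nvcamerasrc_pipeline(), cv2.CAP_GSTREAMER)` followed by `ok, frame = cap.read()`, where `ok` is `False` and `frame` is `None` if no frame arrived.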

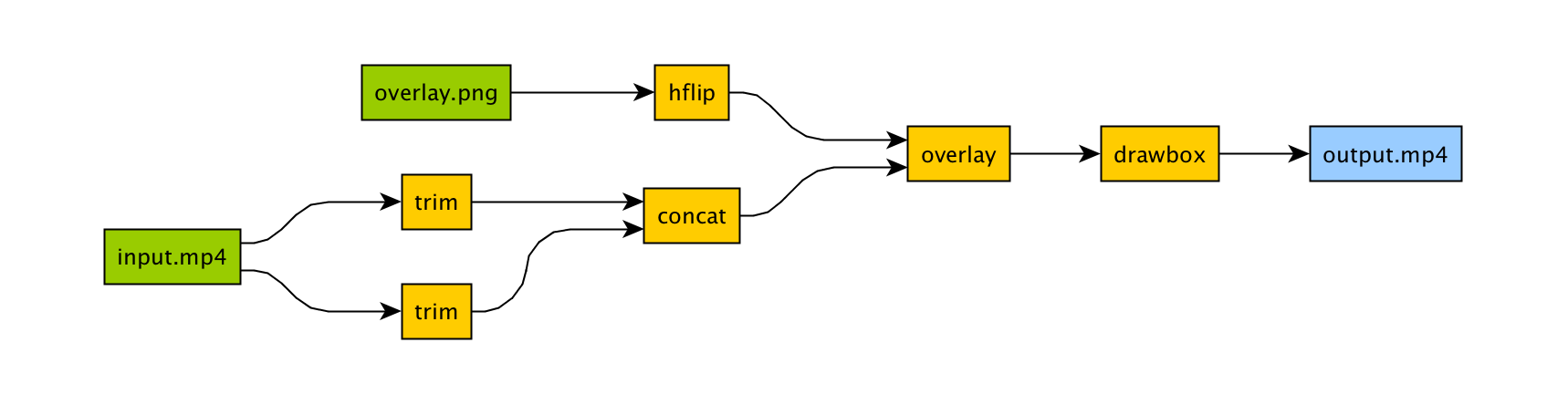


The code I run to reproduce this error starts with `import cv2`. It prints:

- `VIDEOIO ERROR: V4L: can't open camera by index 1`
- `VIDEOIO ERROR: V4L2: Pixel format of incoming image is unsupported by OpenCV`
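The second message means the OpenCV V4L2 backend cannot handle the pixel format the driver offers. A raw Bayer format such as 10-bit `RG10` would explain it, but that is an assumption; `v4l2-ctl -d /dev/video1 --list-formats-ext` lists what the device actually provides. V4L2 identifies these formats by four-character codes packed into a 32-bit integer, the same packing OpenCV uses for `cap.get(cv2.CAP_PROP_FOURCC)`. A small sketch of two hypothetical helpers for converting between the text and numeric forms when comparing formats:

```python
def str_to_fourcc(text: str) -> int:
    """Pack a four-character code such as 'RG10' (first character in the low byte)."""
    if len(text) != 4:
        raise ValueError("a FourCC has exactly four characters")
    return sum(ord(ch) << (8 * i) for i, ch in enumerate(text))


def fourcc_to_str(code) -> str:
    """Unpack a FourCC, e.g. the float returned by cap.get(cv2.CAP_PROP_FOURCC)."""
    value = int(code)
    # Read the four bytes back, lowest byte first.
    return "".join(chr((value >> (8 * i)) & 0xFF) for i in range(4))
```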
